‘Alexa, add this song to my running playlist.’ I bet you have said, or heard someone say, something similar at least once in your life, haven’t you? Artificial intelligence is now part of almost every industry (in customer support, for instance, chatbots are replacing their human counterparts), and it is also part of our everyday lives, as virtual assistants such as Amazon’s Alexa and Apple’s Siri colonise our homes and harvest our data. As if this weren’t enough of an issue, here comes another one: many AI programs are overly feminized, and this makes gender stereotypes and unconscious bias harder to eradicate.

How often do you see masculine or genderless AI? Exactly, now you get it. Why? Well, as you’ll find here, roughly 90% of the developers working on AI are men: this may not be the only reason behind the feminization of AI, but it clearly plays a role and makes change harder. AI, like everything else, is the product of the people who create it and of the data it is trained on. Unconscious or deliberate biases can lead, as in this case, to feminine qualities being attributed to roles traditionally held by women, such as secretaries and customer service representatives: programmers just don’t picture men in those positions, and this reinforces the stereotype that women are better suited to administrative and service-oriented tasks.

Discrimination against women also stems from the dominant male-unless-otherwise-indicated approach, which has created a gender data gap: a pervasive yet invisible bias with a big impact on women's lives. Male data makes up most of what we know, and what is male is presented as universal, while women are treated as an invisible minority. What is striking is the absence of ethical codes and regulatory frameworks guiding the development and use of these technologies, which raises major concerns about the protection of vulnerable groups in society who already face biases based on gender, religion, age, ethnic origin, and political or sexual orientation.

Moreover, because of gender stereotypes that portray women as more altruistic, passive and docile, users respond better to a female voice assistant, and even chatbots are given a feminine profile. Femininity is also used to keep AI from being seen as something to be afraid of: women are considered more caring and empathetic than men, so a feminine assistant feels less threatening. This shapes how users respond to surveillance capitalism, which tries to distract us from the market logic of the platform economy and to obscure the ways in which we are being watched.

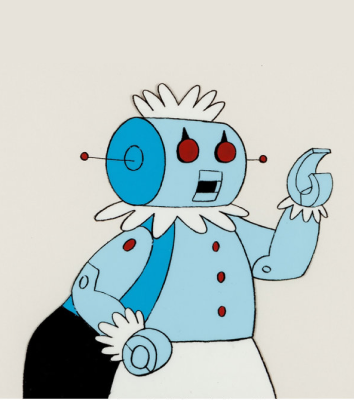

That’s why a feminine voice helps drive AI adoption, which in turn furthers the feminization of AI. Alexa, Google Home and the others also have disturbingly tame and playful pre-programmed responses to verbal abuse: even though companies may find it helpful to have an AI that avoids confrontation, linking submissiveness to gender does not help women or the movement for gender equality. Nor does it help feminine chatbots and robots themselves: they face the same harassment and verbal abuse that real women deal with every day. Sophia, a humanoid "social robot" that can mimic social behavior and induce feelings of love in humans, has, like Alexa and other virtual assistants and chatbots, become the target of the kind of sexist insults and harassment that real women often endure both offline and online.

Ludwig’s wrap-up

AI is feminized to meet the demands of surveillance capitalism, this feminization now pervades our culture, and it should raise serious concerns for programmers and AI professionals. The development and use of these technologies come with ethical, legal, and human rights concerns for users: hiring more women and non-binary people may help reduce conscious and unconscious biases against both men and women. Developing a gender-neutral voice for our AI chatbots and robots, or assigning the default voice so that it has an equal probability of being female or male, could help move away from the female gendering of AI and stop perpetuating this benevolent sexism.